Transparent Middle Layer (TML) data management infrastructure

The ICE-D project is an attempt to implement a so-called 'transparent middle layer' data management and software infrastructure for cosmogenic-nuclide data. If the problem is that it's hard to do synoptic analysis of a data set that is changing all the time as middle-layer calculations evolve, our idea is that the solution has the following elements:

A data layer: a single source of observational data that can be publicly viewed and evaluated, is up to date, is programmatically accessible to a wide variety of software using a standard application program interface (API), and is generally agreed upon to be a fairly complete and accurate record of past studies and publications, beneath:

A transparent middle layer that calculates geologically useful results, in this case exposure ages, from observational data using an up-to-date calculation method or methods, and serves these results via a simple API to:

An analysis layer, which could be any Earth science application that needs the complete data set of exposure ages for analysis, visualization, or interpretation.

The middle layer is “transparent” just because the calculations are fast enough that the user doesn't notice them as a significant obstacle to analysis or visualization. Overall, the important property of this structure is that only observational data (which do not become obsolete) are stored. Derived data (which become obsolete as soon as the middle-layer calculations are improved) are not stored, but instead calculated dynamically when requested by an analysis application. This eliminates unnecessary effort and the associated lock-in effect created by manual calculations by individual users, and allows continual assimilation of new data or methods into both the data layer and middle layer without creating additional overhead at the level of the analysis application. Potentially, this structure also removes the necessity for redundant data compilation by individual researchers by decoupling agreed-upon observational data (which are the same no matter the opinions or goals of the individual researcher and therefore can be incorporated into a single shared compilation) from calculations or analyses based on those data (which require judgements and decisions on the part of researchers, and therefore would not typically be agreed upon by all users).

The reason the writing style just changed abruptly there is that much of the above paragraph is copied from this paper.
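To make the "store observations, compute everything else on request" idea concrete, here is a minimal sketch in Python. It is not the ICE-D code. The sample names, concentrations, and the constant production rate are invented for illustration, and the two age equations are textbook simplifications (no erosion, no production-rate scaling) standing in for whatever the real middle layer does. The only physical constant used is the 1.387 Myr half-life of Be-10.

```python
import math

# Data layer: observations only. These values are invented for illustration.
OBSERVATIONS = [
    {"sample": "EXAMPLE-1", "be10_atoms_per_g": 100_000.0},
    {"sample": "EXAMPLE-2", "be10_atoms_per_g": 250_000.0},
]

PRODUCTION_RATE = 4.0  # atoms/g/yr, assumed constant for this sketch
BE10_DECAY = math.log(2) / 1.387e6  # 1/yr, from a 1.387 Myr half-life


def age_without_decay(n):
    """Older, cruder method: t = N / P."""
    return n / PRODUCTION_RATE


def age_with_decay(n):
    """Improved method: solve N = (P / lambda) * (1 - exp(-lambda * t)) for t."""
    return -math.log(1.0 - n * BE10_DECAY / PRODUCTION_RATE) / BE10_DECAY


def middle_layer(observations, method):
    """Compute ages on request; nothing derived is ever written back."""
    return {obs["sample"]: method(obs["be10_atoms_per_g"]) for obs in observations}


# Analysis layer: ask for ages whenever they are needed.
old_ages = middle_layer(OBSERVATIONS, age_without_decay)
new_ages = middle_layer(OBSERVATIONS, age_with_decay)
for name in old_ages:
    print(f"{name}: {old_ages[name]:.0f} yr -> {new_ages[name]:.0f} yr")
```

Swapping the calculation method changes every age the analysis layer sees, but `OBSERVATIONS` is untouched and there is no stored table of stale ages to go back and fix.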

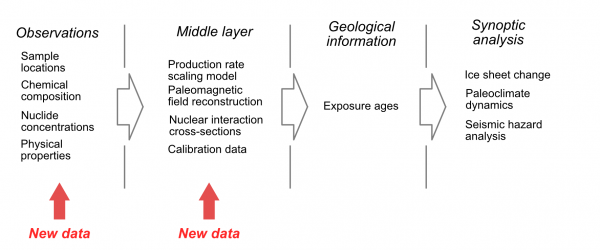

For the special case of cosmogenic-nuclide geochemistry, the general concept is explained (sort of) in this figure from the paper:

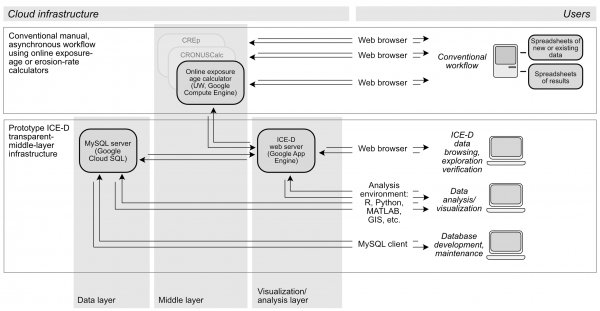

And what that actually translates to in terms of computational infrastructure is explained in this other figure:

If that figure didn't make total sense immediately, here is a more straightforward explanation:

Data Layer

The Data Layer is a relational database running on a MySQL server. It has a pretty standard relational database design based on a hierarchy of sites (a landform or other grouping of samples that should be considered together), samples (which belong to sites), and geochemical measurements of various types (which belong to samples). The data layer page explains how the database is structured.
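As a rough illustration of the site, sample, and measurement hierarchy, here is a small sketch that builds three linked tables and queries them. The table and column names are hypothetical, not the actual ICE-D schema, and it uses Python's built-in `sqlite3` with an in-memory database as a stand-in for the MySQL server so that it runs without any setup.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical schema: measurements belong to samples, samples belong to sites.
conn.executescript("""
CREATE TABLE sites (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE samples (
    id INTEGER PRIMARY KEY,
    site_id INTEGER NOT NULL REFERENCES sites(id),
    name TEXT NOT NULL
);
CREATE TABLE be10_measurements (
    id INTEGER PRIMARY KEY,
    sample_id INTEGER NOT NULL REFERENCES samples(id),
    atoms_per_g REAL NOT NULL
);
""")

# Invented example rows.
conn.execute("INSERT INTO sites (id, name) VALUES (1, 'EXAMPLE-MORAINE')")
conn.executemany(
    "INSERT INTO samples (id, site_id, name) VALUES (?, ?, ?)",
    [(1, 1, "EX-01"), (2, 1, "EX-02")],
)
conn.executemany(
    "INSERT INTO be10_measurements (sample_id, atoms_per_g) VALUES (?, ?)",
    [(1, 100000.0), (2, 250000.0)],
)

# Fetch every measurement at one site by walking down the hierarchy.
rows = conn.execute(
    """
    SELECT sites.name, samples.name, be10_measurements.atoms_per_g
    FROM sites
    JOIN samples ON samples.site_id = sites.id
    JOIN be10_measurements ON be10_measurements.sample_id = samples.id
    WHERE sites.name = ?
    ORDER BY samples.name
    """,
    ("EXAMPLE-MORAINE",),
).fetchall()

for row in rows:
    print(row)
```

A real schema would have one measurement table per measurement type; a single Be-10 table is used here to keep the sketch short.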

Middle Layer

The Middle Layer is a set of calculation services that act on raw data pulled from the database (or from anywhere else) and generate derived quantities such as exposure ages. The so-called online exposure age calculator that has been around for a while is one of these services. These are “web services” that can be accessed by any software that wants a set of exposure ages, or whatever, for analysis. The middle layer page lists the known middle-layer applications and links to some info on how they work.
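The request and response formats of the real services are not described on this page, so the following is only a sketch of the general shape of such a service: structured text in, structured text out, with no dependence on where the data came from. The JSON field names, the `handle_request` function, and the placeholder calculation are all assumptions made up for this example.

```python
import json


def placeholder_age_yr(atoms_per_g, production_rate):
    """Stand-in calculation (t = N / P); a real service would do far more."""
    return atoms_per_g / production_rate


def handle_request(body):
    """Take a JSON request string and return a JSON response string."""
    request = json.loads(body)
    results = [
        {
            "sample": s["sample"],
            "age_yr": placeholder_age_yr(s["atoms_per_g"], request["production_rate"]),
        }
        for s in request["samples"]
    ]
    return json.dumps({"results": results})


# Any client that can build this text can use the service, whether the
# numbers came from the database or were typed in by hand.
request_body = json.dumps({
    "production_rate": 4.0,  # atoms/g/yr, invented for illustration
    "samples": [{"sample": "EX-01", "atoms_per_g": 100000.0}],
})
response = json.loads(handle_request(request_body))
print(response["results"])
```

Because the function only sees text, it could sit behind any web framework and be called from any language, which is the property that lets different analysis applications share one set of calculations.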

Analysis Layer

An Analysis Layer application is anything that takes a set of raw cosmogenic-nuclide data, exposure ages derived from those data, or both, and displays or uses them in some way. The ICE-D website is an example of an analysis layer application.